Parisa Farmanifard

Welcome to my webpage! I am Parisa Farmanifard, a Ph.D. student in the Department of Computer Science and Engineering at Michigan State University, where I conduct research in the iPRoBe Lab under the guidance of Dr. Arun Ross. I also earned my M.S. in Computer Science from MSU. My research focuses on biometrics, particularly iris recognition and presentation attack detection, with a growing emphasis on integrating foundation models (FMs) and large language models (LLMs) into biometric systems.

Publications

[2025] M. Mitcheff, A. Hossain, S. Webster, S.K. Karim, K. Roszczewska, J. Tapia, F. Stockhardt, J. Gonzalez-Soler, J.-Y. Lim, M. Pollok, F. Kreuzer, C. Wang, L. Li, F. Guo, J. Gu, D. Pal, P. Farmanifard, et al., "Iris Liveness Detection Competition (LivDet-Iris) – The 2025 Edition", Proc. of IEEE International Joint Conference on Biometrics (IJCB), 2025. [Competition]

[2025] Redwan Sony, P. Farmanifard, Arun Ross, Anil K Jain, "Foundation versus Domain-specific Models: Performance Comparison, Fusion, and Explainability in Face Recognition", IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), (Honolulu, Hawai'i), October 2025.

[2025] Redwan Sony, P. Farmanifard, Hamzeh Alzwairy, Nitish Shukla, Arun Ross, "Benchmarking Foundation Models for Zero-Shot Biometric Tasks", [Under Review].

[2025] Rasel Ahmed Bhuyian, P. Farmanifard, Renu Sharma, Andrey Kuehlkamp, Aidan Boyd, Patrick J Flynn, Kevin W Bowyer, Arun Ross, Dennis Chute, Adam Czajka, "Beyond Mortality: Advancements in Post-Mortem Iris Recognition through Data Collection and Computer-Aided Forensic Examination", IEEE Transactions on Biometrics, Behavior, and Identity Science.

[2024] P. Farmanifard and Arun Ross, "ChatGPT Meets Iris Biometrics", Proc. of International Joint Conference on Biometrics (IJCB), (Buffalo, USA), September 2024 [Oral].

[2024] P. Farmanifard and Arun Ross, "Iris-SAM: Iris Segmentation Using a Foundation Model", Proc. of 4th International Conference on Pattern Recognition and Artificial Intelligence (ICPRAI), (Jeju Island, South Korea), July 2024 [Oral].

Projects

Contact Lens Detection

I am currently working on improving the detection of contact lenses in iris images to prevent fraud in iris recognition systems. Specifically, I use deep learning models to distinguish between natural irises and those altered by clear or patterned contact lenses, aiming to boost the security of these biometric systems.

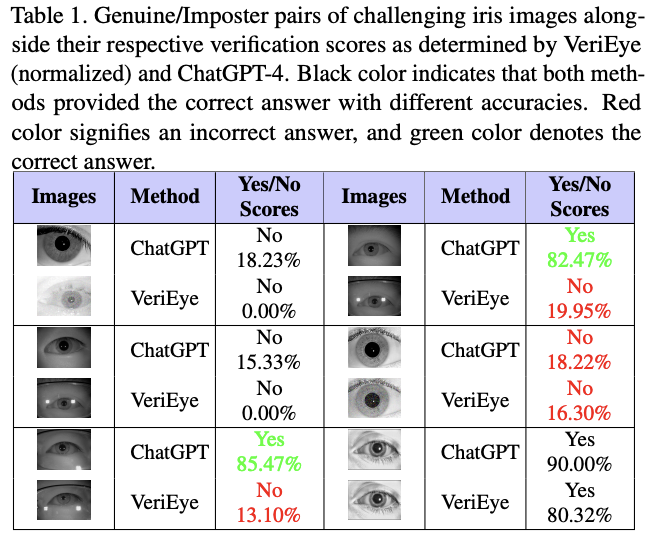

ChatGPT Meets Iris Biometrics

This study uses the multimodal capabilities of GPT-4, a Large Language Model (LLM), to explore its potential in iris recognition, a field less common and more specialized than face recognition. By focusing on this niche yet crucial area, we investigate how well AI tools like ChatGPT can understand and analyze iris images. Through a series of carefully designed zero-shot experiments, the capabilities of ChatGPT-4 were assessed under challenging conditions, including diverse datasets, presentation attacks, occlusions such as glasses, and other real-world variations. The findings indicate that ChatGPT-4 is adaptable and precise: it identifies distinctive iris features and also detects subtle factors, such as makeup, that affect iris recognition. A comparative analysis with Gemini Advanced, Google’s AI model, highlighted ChatGPT-4’s better performance and user experience in complex iris analysis tasks. This research not only supports the use of LLMs for specialized biometric applications but also emphasizes the importance of nuanced query framing and interaction design in extracting significant insights from biometric data. Our findings suggest a promising path for future research and the development of more adaptable, efficient, robust, and interactive biometric security solutions.

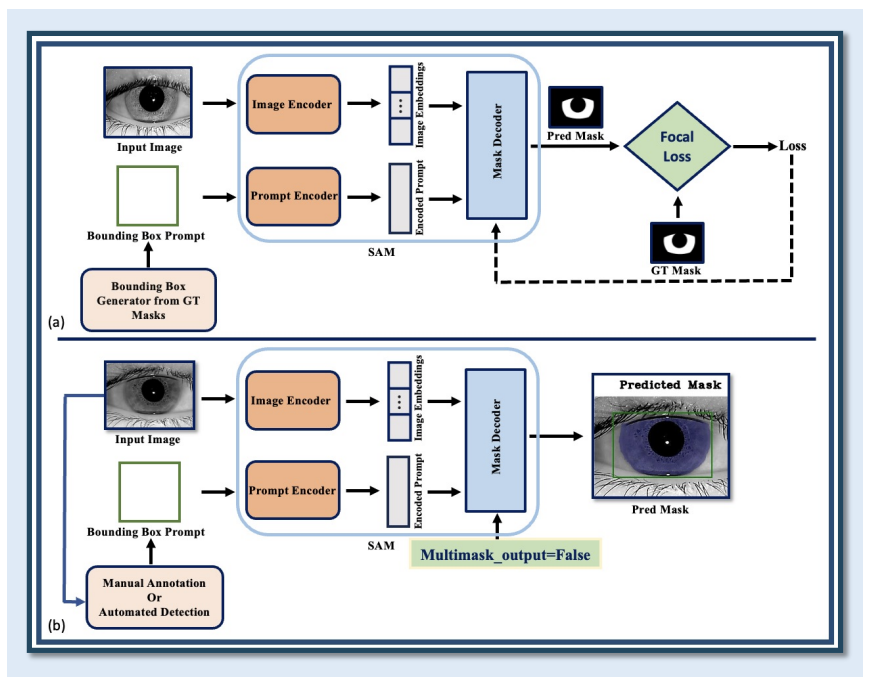

Iris-SAM: Iris Segmentation Using a Foundation Model

Iris segmentation is a critical component of an iris biometric system; it involves extracting the annular iris region from an ocular image. In this work, we develop a pixel-level iris segmentation model from a foundation model, viz., the Segment Anything Model (SAM), which has been successfully used for segmenting arbitrary objects. The primary contribution of this work lies in the integration of different loss functions during the fine-tuning of SAM on ocular images. In particular, the importance of Focal Loss is borne out in the fine-tuning process, since it addresses the class imbalance problem (i.e., iris versus non-iris pixels). Experiments on the ND-IRIS-0405, CASIA-Iris-Interval-v3, and IIT-Delhi-Iris datasets demonstrate the efficacy of the trained model for the task of iris segmentation. For instance, on the ND-IRIS-0405 dataset, an average segmentation accuracy of 99.58% was achieved, compared to the best baseline performance of 89.75%.

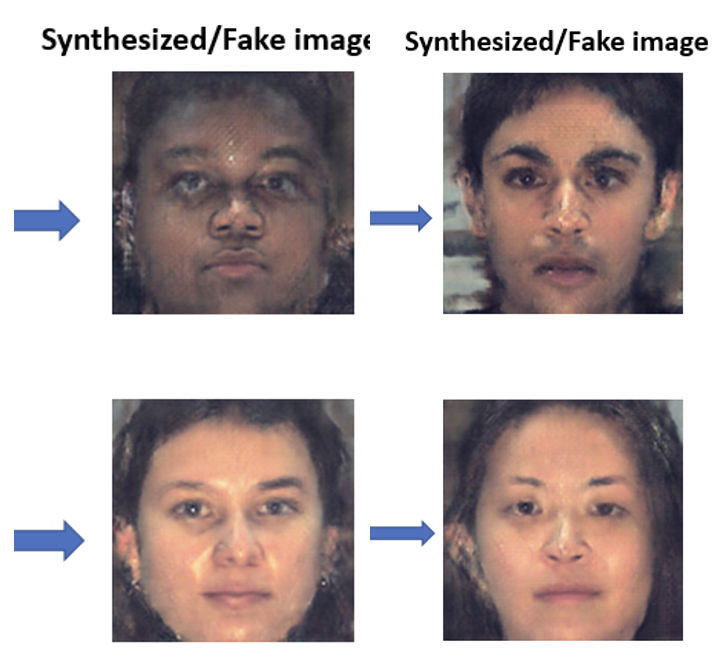
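To make the role of Focal Loss in the description above more concrete, here is a minimal sketch of a per-pixel binary focal loss in plain Python. It is an illustration only, not the training code used for Iris-SAM: the function name `binary_focal_loss` and the default values `gamma=2.0` and `alpha=0.25` are assumptions (they are the commonly cited defaults for focal loss, not values reported for this project).

```python
import math

def binary_focal_loss(probs, targets, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean focal loss over a flat list of per-pixel iris probabilities.

    probs   -- predicted probability that each pixel is iris (0..1)
    targets -- ground-truth labels, 1 for iris and 0 for non-iris
    gamma, alpha -- hypothetical defaults, not taken from the Iris-SAM work
    """
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)       # avoid log(0)
        p_t = p if y == 1 else 1.0 - p        # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha
        # (1 - p_t) ** gamma shrinks the loss of well-classified pixels
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)

# Toy comparison: a confidently correct background pixel versus a
# poorly classified iris pixel.
easy_background = binary_focal_loss([0.05], [0])
hard_iris = binary_focal_loss([0.30], [1])
print(easy_background < hard_iris)
```

With `gamma=0` the modulating factor disappears and the expression reduces to alpha-weighted cross-entropy, which is one way to see how the focusing term shifts the training signal toward hard pixels.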

Cross-Spectral Face Recognition Using Generative Adversarial Networks (GANs)

Cross-spectral Face Recognition (CFR) refers to the task of identifying matching features between two images of the same person captured in two different spectral bands. This involves recognizing faces across different imaging modalities such as visible, infrared, or depth maps. In this study, we used ArcFace to calculate match scores between images taken from different spectral bands. We first computed similarity scores between original images of the same identity captured in SWIR and VIS, and then compared them with the similarity scores between VIS images synthesized by SG-GAN and the original VIS/SWIR images. Our results showed that using ArcFace led to the best performance for both synthesized and original images. This was because the similarity scores were well separated in the histogram, indicating high discrimination between different identities. However, the performance of outdoor image analysis can be improved by applying image enhancement methods that address issues such as low contrast, noise, or illumination variations.
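The match-score comparison described above can be sketched as follows. This is a hedged illustration, not the project's code: it assumes face embeddings are already available as plain lists of floats (the toy vectors below are made up, not ArcFace outputs), it uses cosine similarity as the match score, and it summarizes histogram separation with the d-prime statistic, which is one common choice rather than a measure named in the study. The helper names `cosine_similarity` and `d_prime` are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def d_prime(genuine, impostor):
    """Separation between genuine and impostor score distributions."""
    mean_g = sum(genuine) / len(genuine)
    mean_i = sum(impostor) / len(impostor)
    var_g = sum((s - mean_g) ** 2 for s in genuine) / len(genuine)
    var_i = sum((s - mean_i) ** 2 for s in impostor) / len(impostor)
    pooled = math.sqrt((var_g + var_i) / 2.0)
    if pooled == 0.0:
        return float("inf")  # perfectly separated, zero-variance scores
    return abs(mean_g - mean_i) / pooled

# Toy embeddings (assumed values): same identity in VIS and SWIR,
# plus a different identity in VIS.
vis_a = [0.9, 0.1, 0.2]
swir_a = [0.8, 0.2, 0.1]
vis_b = [0.1, 0.9, 0.3]

genuine_score = cosine_similarity(vis_a, swir_a)
impostor_score = cosine_similarity(vis_b, swir_a)
print(genuine_score > impostor_score)
```

A larger d-prime over many genuine and impostor pairs corresponds to the well-separated histograms mentioned in the text.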

Poster Presentations

- "Eye Contact: A Dual-Stage Approach for Automated Contact Lens Detection", University of Notre Dame 2025 (UND).

- "ChatGPT Meets Iris Biometrics", IJCB 2024 (Buffalo, NY).

- "Iris-SAM: Iris Segmentation Using a Foundation Model", Engineering Graduate Research Symposium 2024 (MSU).

- "Cross-Spectral Face Recognition Using a Semantic-Guided GAN", Engineering Graduate Research Symposium 2023 (MSU).

Education

- [2021 - Present] Doctor of Philosophy (Ph.D.), Computer Science, Michigan State University, USA.

- [2021 - 2023] Master of Science (MS), Computer Science, Michigan State University, USA.

- [2013 - 2017] Bachelor of Engineering (B.E.), IT Engineering, MU.

Internship Experience

- In the summer of 2023, I interned at American Electric Power (AEP), working with their emerging technology group. My role involved a project on "drone-based pole detection", in close collaboration with a computer vision and machine learning group. During the internship, I worked with various detection methods, including Detectron2, YOLOv4, YOLOv8, and the Segment Anything Model (SAM), primarily on drone images.